We don't learn from experience because we refuse to accept the cause and effect loops that are there in plain sight.

Cognitive dissonance - When there is a discrepancy between reality as observed and one's worldview -- the perception of reality as constructed or conceived over one's lifetime -- people experience a sense of discomfort. Social psychologists call this uncomfortable feeling "cognitive dissonance." Theorists hypothesize that people will tend to reconstruct their perceptions of reality in ways that reduce the dissonance. Cognitive dissonance is a motivating state of tension that occurs whenever a person holds two psychologically inconsistent cognitions in mind.

Leon Festinger coined the term after observing a cult of people who believed they were recipients of a "revelation" from God about the end of times. The date of the apocalypse had been revealed to them through a prophet in exact terms: December 21, 19XX. Festinger stayed close to the group as the fateful day approached. When the end of times did not arrive, members displayed a surprising conviction that their belief system had not really been disproven. Rather, they tended to change their memories of what the prophet had said, and to find ways in which the revelation had indeed come true. Their belief system remained intact. Festinger noted that members appeared to find the cost of admitting they'd been mistaken too great to bear in light of the emotional and life investments each had made in the group. In a nutshell, cognitive dissonance appears to be such a powerful motivator that people will distort their perception of reality in order to lessen or mitigate the discomfort.

Confirmation Bias -- Aronson notes that CD often leads to a "confirmation bias," characterized by both selective perception and selective memory.

Selective Perception - First, we see that individuals faced with an array of disparate information will "select" and attend to the tidbits of data that support their worldview, or set of expectations, and will ignore information that does not fit their expectations.

Selective and Distorted Memory - Second, studies of memory have shown that, over time, people's memories tend to change in ways that best "fit" their self-concept and general worldview.

T&A quote: p. 6 - "self serving distortions of memory kick in and we forget or distort past events." We gradually come to believe our own lies, the more we tell them. In fact, obvious misstatements of truth don't begin as full blown lies. First a little, then a little more, and then more still. Quicksand is a good metaphor for this. You get sucked in very gradually.

They cite LBJ, pg 7 - "a president who has justified his actions to himself, believing that he has the truth, becomes impervious to self-correction." LBJ "had a fantastic capacity to persuade himself that the 'truth' which was convenient for the present was the truth..."

Self-Justification -- CD can also be employed to explain the strong tendency for people to engage in "self-justification." Tavris & Aronson: p. 29: "Dissonance is bothersome under any circumstance, but it is most painful to people when an important element of their self-concept is threatened -- typically when they do something that is inconsistent with their view of themselves."

T&A note (p 20) that the "confirmation bias sees to it that no evidence -- the absence of evidence -- is evidence for what we believe." On p 2 they note that "throughout his presidency GWB was the epitome of a man for whom even irrefutable evidence could not pierce his mental armor of self-justification." They note he was wrong in his assertion of WMD, in his claim of a Saddam-Al Qaeda link, in his estimate of the cost of the war, and in his expectation that Iraqis would welcome the arrival of American soldiers with a joyful reception.

Lord Molson, British politician: (p. 17) --- "I will look at any additional evidence to confirm the opinion to which I have already come."

Many polls showed that many Americans believed that WMD had been found in Iraq, for months and even years after the military had concluded that there was nothing there to find. (Find examples.)

Hypocrisy: Aldous Huxley said "there is no such thing as a conscious hypocrite." (p 5). They note that Ted Haggard did not dwell on the hypocrisy of railing against homosexuality while participating in a sexual relationship with a male prostitute.

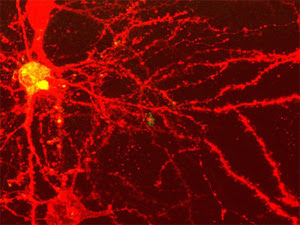

We now find that the brain itself appears to shut down in the presence of unexpected or dissonant information. See Drew Westen's article, Neural Bases of Motivated Reasoning. It appears that biases in perception are built into the way the brain works.

Once a decision is made, there are multiple mechanisms available for people to use to justify the rightness of the choice.

T&A point poignantly to the end of Casablanca. Rick warns Ilsa that she would regret making the wrong choice, staying in Casablanca with him: "maybe not today, maybe not tomorrow, but soon, and for the rest of your life..." A noble ending, but not a correct observation of human nature. T&A postulate that Ilsa would come to believe strongly in the rightness of her choice, no matter which direction she'd chosen -- the drive for self-justification is just that strong.

Look through the lens of dissonance theory -- Several studies have shown that the judgments of "experts" in many fields are no more sound than those of randomly chosen people. The difference is that the experts are supremely confident in their view, while others admit to doubts.

Moreover, the experts are far more susceptible to distortions of their perceptions due to CD because their professional and personal reputations are at stake, unlike those of the average person. This suggests, of course, that experts may be less likely to admit mistakes and learn from experience than non-experts.

T&A - p 36 - story of Jeb Magruder, Watergate culprit - shows he was a good and decent person when he entered into his relationship with Liddy and the White House. Slowly, a little at a time, Magruder went along with dishonest and illegal actions, justifying each one as he did.

Milgram's subjects followed the same pattern. Most would have refused to deliver the maximum jolt when they entered the situation, but as the voltage built up slowly, they seemed to justify each increase because it was not much more than the last. The Milgram experiment is widely cited as showing that ordinary people will do vile and despicable things when convinced that they are doing something for the greater good.

pg. 43 - "Democrats will endorse an extremely restrictive welfare proposal, one usually associated with Republicans, if they think it has been proposed by the Democratic Party." p 43 - We are as unaware of our blind spots as fish are unaware of the water they swim in. These biases lead to wrong decisions because of the confirmation bias, and lead us to continue justifying those decisions in order to avoid cognitive dissonance.

Merck funded the drug Vioxx. Its own scientists did not uncover dangers, despite available data. Only when independent scientists, neither funded by Merck nor having their own reputations at stake, examined the data was evidence of risks associated with the drug exposed. Likely, Merck's investigators did not lie, nor did they "knowingly" distort their findings. Rather, they fell prey to the cognitive biases that beset us all.

T&A p. 51 - Similarly, a group of scientists paid by a group of parents of autistic children produced a study showing a positive correlation between childhood autism and childhood vaccines. Six years later, 10 of 13 researchers retracted some of the results, citing a conflict of interest by the lead author. Since then, five studies have found no causal relationship between vaccines and autism.

Us versus not-Us -- pg 59 - "When things are going well, people feel pretty tolerant of other cultures and religions ... but when they are angry, anxious, or threatened, the default position is to activate their blind spots..." in a manner known as ethnocentrism: the belief that our own culture, nation or religion is superior to all others. Stereotypes are bolstered by the self-justification bias. Prejudice, once accepted to a small degree, is difficult to dislodge: cherry-picked pieces of data justify the initial belief, and prejudice grows a little bit at a time.

Hitler's henchman Albert Speer - wrote in his memoirs: "people who turn their backs on reality are soon set straight by the mockery and criticism of those around them, which makes them aware they have lost credibility. In the Third Reich there were no such correctives, especially for those who belonged to the upper stratum. To the contrary, every self-deception was multiplied as in a hall of distorting mirrors, becoming a repeatedly confirmed picture of a fantastical dream world which no longer bore any relationship to the grim outside world. In those mirrors I could see nothing but my own face reproduced many times over." T&A refer to memory as "the self-justifying historian." pg 69

p 69 - Most of us neither intend to lie nor intentionally deceive. Rather, we are self-justifying. "All of us, when we tell our stories, add details and omit inconvenient facts; we give the tale a small, self-enhancing spin." Reinforced for the story, we embellish it even more upon the next telling. "At the simplest level, memory smoothes out the wrinkles of dissonance by enabling the confirmation bias to hum along, selectively causing us to forget the discrepant information about beliefs we hold dear."

"If mistakes were made, memory helps us remember that they were made by someone else." pg 70 Anthony Greenwald refers to "the totalitarian ego" that ruthlessly destroys information it doesn't want to hear. [Find this XXX]

pg 71: great quote: "Confabulation, distortion, and plain forgetting are the foot soldiers of memory, and they are summoned to the front lines when the totalitarian ego wants to protect us from the pain and embarrassment of actions we took that are dissonant with our core self-images."

Nietzsche: "'I have done that,' says my memory. 'I cannot have done that,' says my pride, and remains inexorable. Eventually--memory yields."

T&A page 71 - Memory is reconstructive: pieces of experience are rebuilt from different parts of the brain, much as a message is reconstructed over the internet from bytes that travel through disparate pathways. As we rebuild the core memory, we are subject to the bias of our own theories. Upon repeated rebuilding, our story begins to look more and more as we'd have liked it to be. This relates to the "source confusion" phenomenon.

When people learn that their memories are wrong, they are stunned.

We shape memories to fit our life story, rather than vice versa. See the Barbara Tversky and Elizabeth Marsh study -- they show we "spin the stories of our lives." Memories change to fit the story, and this happens gradually, over time. Generally, memories change in the direction of self-enhancement.

See the story of the book Fragments, by Binjamin Wilkomirski. He created a false biography, but seems to believe in the truth of his story. The book tells of his experiences in the Nazi death camps, despite...... There was a major problem with the story, though: as far as historians know, there were no "orphanages" in the Nazi concentration camps.

From Nassim Nicholas Taleb's The Black Swan: The Impact of the Highly Improbable. Memory is "a self-serving dynamic revision machine: you remember the last time you remembered the event and, without realizing it, change the story at every subsequent remembrance." (italics by original author) "we pull memories along causative lines, revising them involuntarily and unconsciously. We continuously re-narrate past events in the light of what appears to make what we think of as logical sense..."

He calls this "reverberation." Memory corresponds to the strengthening of connections from an increase in brain activity in a given sector of the brain -- the more activity, the stronger the memory. The brain works this way: it creates narratives, and when a memory does not fit the narrative, we fix it so that it does. Dreams are forms of narrative.

Taleb says that the story of the Maginot Line is suggestive. The French did learn from their experiences of WW1. They just "learned too specifically." In the run-up to WW2, they readied themselves to protect their country from a threat similar to the one it had faced two decades before.

pg. 50 -- We have a tendency to "tunnel"... we focus on a few well-defined sources of uncertainty, on too specific a list of possibilities, at the expense of unimagined possibilities. This is why we did not expect a 9/11-style attack.

pg. 55 - Taleb describes what he calls "naive empiricism" -- "we have a tendency to look for instances that confirm our story and our vision of the world-- these instances are always easy to find... You take past instances that corroborate your theories and you treat them as evidence."

pg 62 - The narrative fallacy -- "our vulnerability to overinterpretation and our predilection for compact stories over raw truth." Note how truths are carried across generations in the form of mythology.

pg 84 -- the way to avoid the ills of the narrative fallacy is to favor experimentation over story-telling, experience over history, and clinical knowledge over theories.

pg 119 - "... we are explanation-seeking animals who tend to think everything has an identifiable cause and grab the most apparent one as the explanation. Yet there may not be a visible because; to the contrary, frequently there is nothing, not even a spectrum of possible explanations."

Back to T&A: many of these false memories are not the result of calculated self-interest, but of self-persuasion. "The weakness of the relationship between accuracy and confidence is one of the best-documented phenomena in the 100-year history of eyewitness memory research." pg 108

The first interpretation of events is hard to break from. There is much evidence of this in the interrogation literature. In this day of DNA evidence showing past cases to have been mistakenly decided, a surprising number of prosecutors in these cases refuse to admit that the verdicts were wrong. "We impulsively decide we know what happened and then fit the evidence to support our conclusion, ignoring and discounting evidence that contradicts it." page 135 T&A

When the evidence does not fit, we tend to simply omit it. Many courtroom-based studies have shown that jurors often make up their minds early in the process, and then selectively accept or reject the validity of evidence as it fits or contradicts their initial inclination. That is, when the evidence does not fit the story, they discount its importance.

In studies of police investigations: the confirmation bias sees to it that the prime suspect becomes the only suspect. pg 137

"Once we have placed our bets, we don't want to entertain any information that casts doubt on that decision."

Example of spiraling self-justification: the crisis in Iran, 1979 - Americans viewed their country as being attacked without provocation. So the Iranians were mad at the shah; Americans reacted, "What does that have to do with us?"

What started the hostage crisis? Each side blames the other. To Iranians, the Americans started the process in 1953, when the US aided a coup that deposed a democratically elected leader, Mohammad Mossadegh, and installed the Shah. Iranians blamed America as the shah accumulated great wealth and used his secret police, the SAVAK, to put down dissent in a reportedly brutal manner.

The engine of the back-and-forth downward spiral of blame and condemnation: self-justification.

General Westmoreland said during the Vietnam War: "The Oriental doesn't put the same high price on life as does the Westerner. Life is plentiful. Life is cheap in the Orient." More self-justification and stereotyping -- see Boston Globe 7-20-05.

Two ways to reduce cognitive dissonance. The first is to say that if we do it, it must not be torture. "We do not torture," said GWB, "we use an alternative set of procedures." The second is to state that the victims of torture simply got what they deserved.

"When George Bush announced that he was launching a 'crusade' against terrorism, most Americans welcomed the metaphor. In the West, crusade has positive connotations, associated with the good guys" -- think of the Billy Graham crusades... Batman and Robin, the Caped Crusaders.

Not so for most Muslims. The First Crusade, launched in 1095, is still remembered: its army of Christians slaughtered the inhabitants of Jerusalem. To Muslims, it "might just as well have occurred last month, it's that vivid in the collective memory." Pg 206

In the 2nd presidential debate with John Kerry, October 8, 2004, Bush was asked for "three instances in which you came to realize you had made a wrong decision, and what you did to correct it." His response: "[When people ask about mistakes] they’re trying to say 'Did you make a mistake in going to Iraq?' And the answer is 'Absolutely not.' It was the right decision....Now, you asked what mistakes. I made some mistakes in appointing people, but I'm not going to name them. I don't want to hurt their feelings on national TV."

Lao Tzu:

A great nation is like a great man:

When he makes a mistake, he realizes it.

Having realized it, he admits it.

Having admitted it, he corrects it.

He considers those who point out his faults

as his most benevolent teachers.